Optimizing Vision-Language Models for Production: A Deep Dive into Quantization and Pruning

TL;DR

I benchmarked popular Vision-Language Models (VLMs) like LLaVA, Qwen-VL, and PaliGemma to see how they handle quantization and pruning. The takeaway: 4-bit quantization is a no-brainer for most use cases, offering massive memory savings with minimal quality loss. For those needing speed improvements, GLU pruning (removing MLP neurons) achieved the best latency reductions, delivering significantly faster inference than head pruning or L1 methods, though at some cost to quality preservation.

Introduction

Vision-Language Models (VLMs) like LLaVA, Qwen-VL, and PaliGemma are transforming how machines understand the world. But deploying these behemoths in production—especially on edge devices or latency-sensitive applications—is a massive challenge.

In this project, I set out to answer a critical question: How much can I compress these models without breaking them?

I conducted an extensive benchmark of popular VLMs, experimenting with quantization (4-bit, 8-bit) and structural pruning to find the sweet spot between performance and efficiency. Here’s what I found.

Part 1: The Baseline Benchmark

I started by establishing a baseline with four top contenders:

- BLIP-2: The reliable veteran.

- Qwen-VL: A powerhouse from Alibaba.

- PaliGemma: Google’s efficient, open VLM.

- LLaVA: The robust open-source standard (specifically LLaVA-1.5-7b).

The Metrics That Matter

I didn’t just look at accuracy. For production, you need to balance three things:

- Quality: I defined a composite “Quality Score” as the average of three metrics: (METEOR + ROUGE-1 + BERTScore-F1) / 3. This gives a balanced view of n-gram overlap and semantic similarity.

- Latency: How long does it take to get an answer?

- VRAM: Can it fit on a consumer GPU?
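A minimal sketch of the composite score follows. The function name `quality_score` is illustrative and not taken from the benchmark code; the input values are the LLaVA FP16 row of the supplementary table, rounded to five decimals.

```python
def quality_score(meteor: float, rouge1: float, bertscore_f1: float) -> float:
    """Unweighted mean of the three metrics, each on a 0-1 scale."""
    return (meteor + rouge1 + bertscore_f1) / 3


# LLaVA-1.5-7b at FP16, values copied from the supplementary table.
llava_fp16 = quality_score(0.13410, 0.22468, 0.82343)
print(f"{llava_fp16:.3f}")  # prints 0.394
```

Because BERTScore-F1 sits between roughly 0.76 and 0.83 for every configuration in the table, most of the spread in this composite comes from METEOR and ROUGE-1.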

Quantization: The “Free” Lunch?

I ran everything at FP16 (half-precision), 8-bit, and 4-bit quantization.

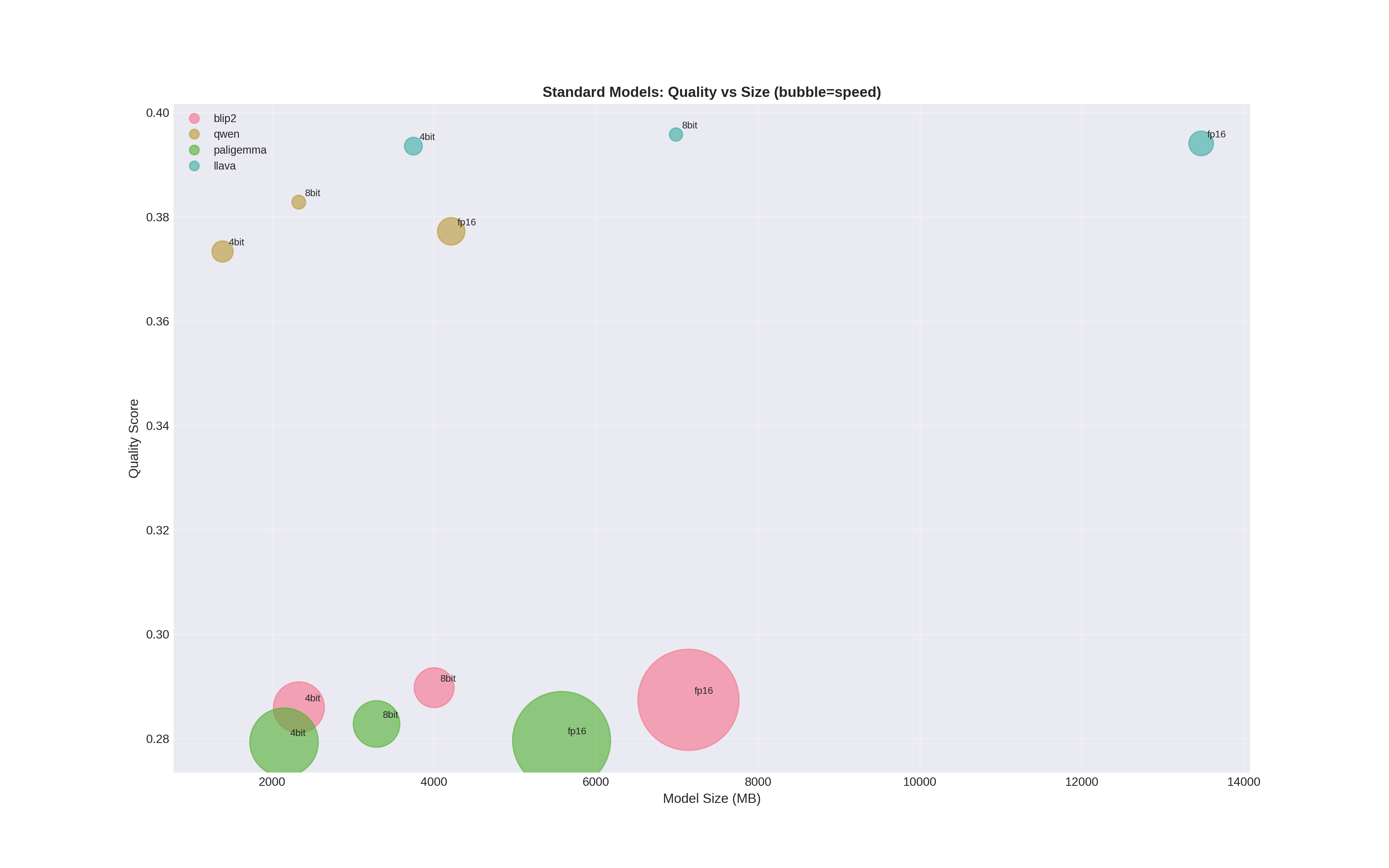

The Result? Surprisingly, 4-bit quantization is almost always worth it.

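- Size: Large reduction (roughly 2.6x to 3.6x smaller than FP16 across the four models).

- Speed: No free lunch here. In my runs the quantized models were slower than FP16 (LLaVA averaged 2.39s at FP16, 4.59s at 4-bit, and 8.35s at 8-bit), although 4-bit was faster than 8-bit for all four unpruned models. The benefit of 4-bit is memory, not raw latency.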

- Quality: The drop in semantic understanding (BERTScore) was negligible for most tasks, though some nuance is lost in complex reasoning.
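To make the size claim concrete, here is a small sketch that derives the FP16-to-4-bit compression ratio for each model from the `model_size_mb` column of the supplementary table. The helper name `compression_ratio` is illustrative only.

```python
# (fp16_mb, four_bit_mb) copied from the model_size_mb column of the table.
SIZES_MB = {
    "blip2": (7142.57, 2332.68),
    "qwen": (4213.31, 1390.31),
    "paligemma": (5576.07, 2149.65),
    "llava": (13472.42, 3746.42),
}


def compression_ratio(fp16_mb: float, quant_mb: float) -> float:
    """How many times smaller the quantized checkpoint is than FP16."""
    return fp16_mb / quant_mb


ratios = {name: compression_ratio(*sizes) for name, sizes in SIZES_MB.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x smaller at 4-bit")
```

None of the ratios reaches the theoretical 4x. A plausible explanation, which I did not measure, is that some layers stay in higher precision after quantization.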

Part 2: Surgical Precision with Pruning

Quantization is great, but what if we want to physically remove parts of the model? I focused my pruning efforts on LLaVA, applying three structural pruning techniques:

- GLU Pruning: Trimming the Feed-Forward Networks (MLPs). I followed the approach described by Pere Martra in his article Exploring GLU expansion ratios: Structured pruning in Llama-3.2 models. This method calculates neuron pair importance based on the Maximum Absolute Weight of the `gate_proj` and `up_proj` layers. I pruned the least important neuron pairs and resized the intermediate layers accordingly.

- Head Pruning: Removing attention heads that contribute least to the output. To select which heads to prune, I calculated the L2 norm of the `o_proj` (output projection) weights for each head. I then averaged these norms across all layers and removed the specific head indices that had the lowest average importance globally. This ensures we remove the “weakest” heads consistently across the model structure.

- L1 Pruning: Magnitude-based pruning of weights. Note: Standard PyTorch L1 pruning only applies a mask (setting weights to zero) and does not physically reduce the model size or architecture. It serves as a baseline for comparison.
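The two structural selection rules above can be sketched in a few lines. This is a toy illustration, not the benchmark code: plain Python lists stand in for weight tensors, the function names are hypothetical, and summing the two max-abs values per neuron pair is one assumed reading of the scoring rule.

```python
import math


def glu_neurons_to_keep(gate_proj, up_proj, prune_ratio):
    """Return sorted indices of intermediate neurons to keep.

    gate_proj and up_proj are lists of rows, one row per intermediate
    neuron (shape: intermediate_size x hidden_size).
    """
    def max_abs(row):
        return max(abs(w) for w in row)

    # Assumed scoring rule: add the max-abs weight of each half of the pair.
    scores = [max_abs(g) + max_abs(u) for g, u in zip(gate_proj, up_proj)]
    n_keep = max(1, round(len(scores) * (1 - prune_ratio)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])


def heads_to_prune(o_proj_per_layer, num_heads, n_prune):
    """Return the head indices with the lowest layer-averaged L2 norm.

    Each o_proj is a list of rows; head h owns the input columns
    [h * head_dim, (h + 1) * head_dim).
    """
    head_dim = len(o_proj_per_layer[0][0]) // num_heads
    avg_norm = [0.0] * num_heads
    for o_proj in o_proj_per_layer:
        for h in range(num_heads):
            cols = range(h * head_dim, (h + 1) * head_dim)
            squared = sum(row[c] ** 2 for row in o_proj for c in cols)
            avg_norm[h] += math.sqrt(squared) / len(o_proj_per_layer)
    weakest_first = sorted(range(num_heads), key=lambda h: avg_norm[h])
    return sorted(weakest_first[:n_prune])


# Toy MLP: 4 intermediate neurons, hidden size 2.
gate = [[0.9, -0.1], [0.05, 0.02], [-0.6, 0.3], [0.01, -0.03]]
up = [[0.5, 0.2], [0.04, -0.01], [0.1, -0.7], [0.02, 0.02]]
kept = glu_neurons_to_keep(gate, up, prune_ratio=0.5)
print(kept)  # prints [0, 2]

# Toy attention: 2 layers, hidden size 4, 2 heads (head_dim 2).
layer_a = [[1.0, 1.0, 0.1, 0.1] for _ in range(4)]
layer_b = [[0.5, 0.5, 0.2, 0.2] for _ in range(4)]
pruned_heads = heads_to_prune([layer_a, layer_b], num_heads=2, n_prune=1)
print(pruned_heads)  # prints [1]
```

In a real model, the kept neuron indices would select rows of `gate_proj` and `up_proj` and the matching columns of `down_proj`, which is what shrinks the intermediate layer.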

The Findings

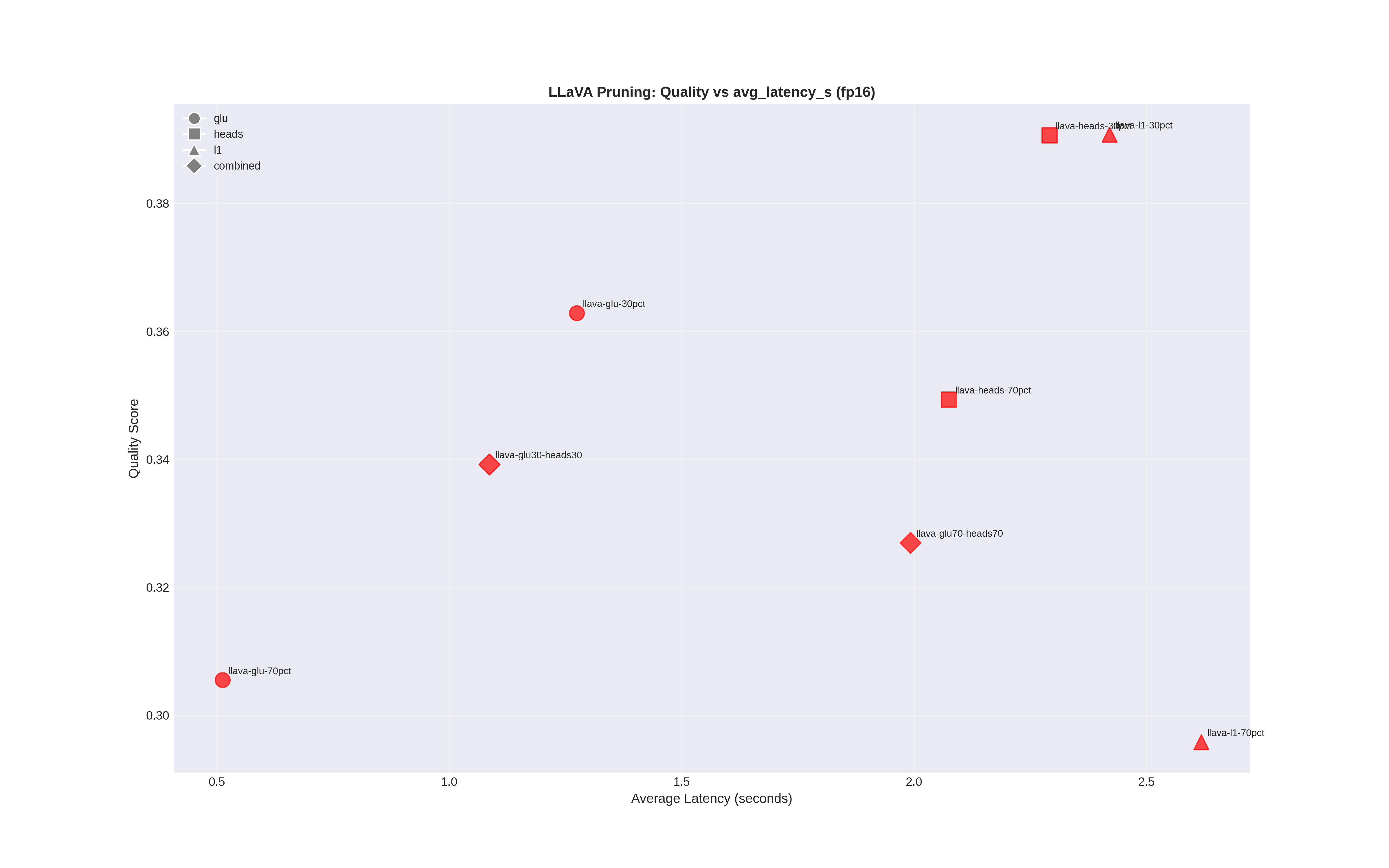

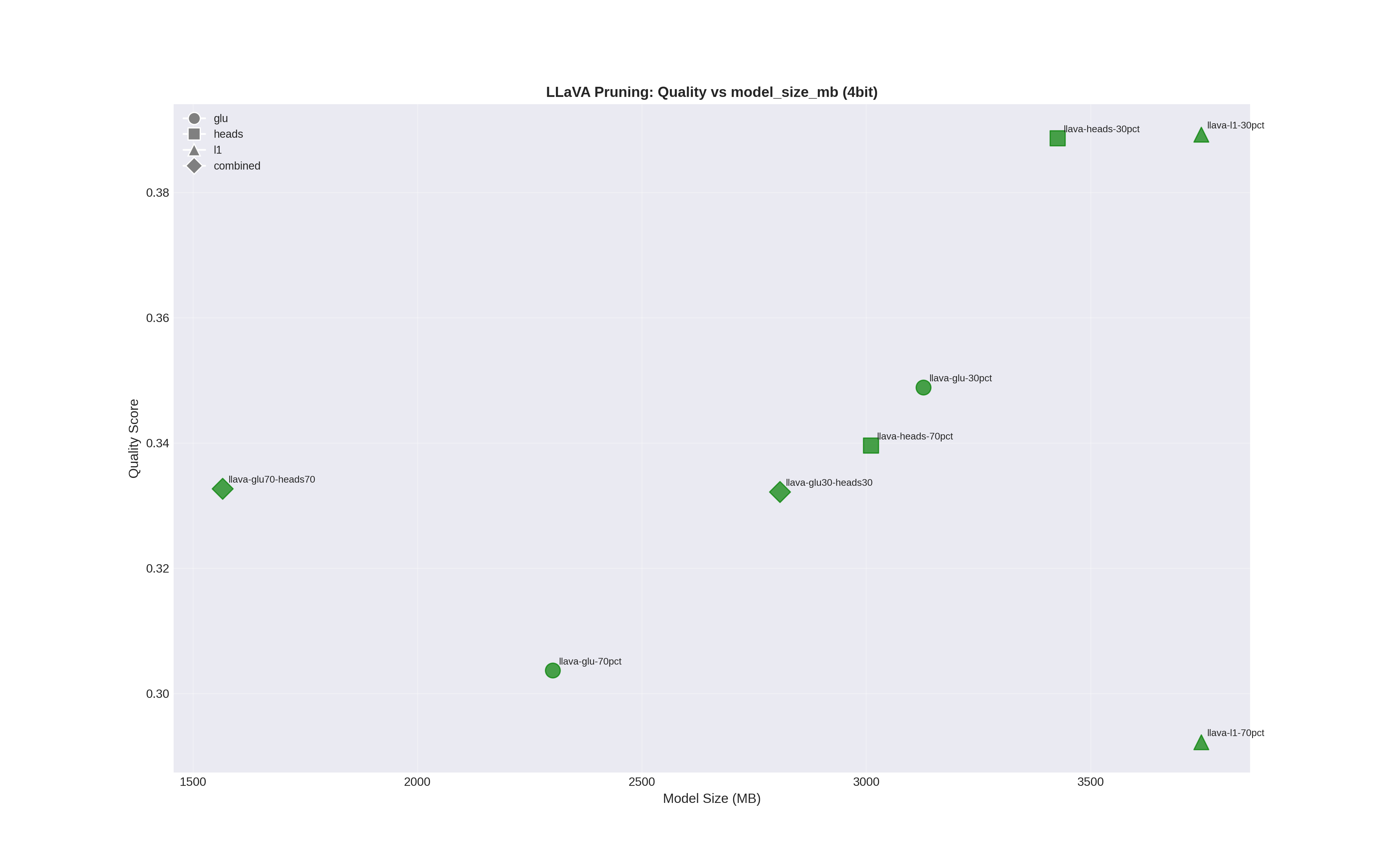

I tested pruning intensities of 30% and 70%.

- 30% Pruning: This is the safe zone. Most pruning methods retained >90% of their original quality. Head pruning performed best for quality preservation (99% retention at fp16), while GLU pruning delivered the best latency improvements (1.27s vs. 2.29s for heads).

- 70% Pruning: Quality degradation becomes significant. Head pruning retained quality better (89% vs. 77% for GLU), but GLU pruning achieved dramatically lower latency (0.51s vs. 2.08s), making it the clear winner for speed-critical applications willing to trade some quality.
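The retention and latency figures quoted above can be reproduced from the supplementary table. The sketch below uses the FP16 rows, rounded; the dictionary keys and the `quality` helper are illustrative names.

```python
# (meteor, rouge1, bertscore_f1, avg_latency_s) at FP16, from the table.
RUNS = {
    "baseline": (0.13410, 0.22468, 0.82343, 2.390),
    "glu-30": (0.09247, 0.17986, 0.81621, 1.274),
    "heads-30": (0.13176, 0.22324, 0.81689, 2.292),
    "glu-70": (0.03359, 0.07885, 0.80401, 0.512),
    "heads-70": (0.09322, 0.17040, 0.78439, 2.075),
}


def quality(run):
    """Composite Quality Score: mean of METEOR, ROUGE-1 and BERTScore-F1."""
    meteor, rouge1, bert_f1, _latency = run
    return (meteor + rouge1 + bert_f1) / 3


base = RUNS["baseline"]
summary = {}
for name, run in RUNS.items():
    if name == "baseline":
        continue
    retention = 100 * quality(run) / quality(base)
    latency_cut = 100 * (1 - run[3] / base[3])
    summary[name] = (retention, latency_cut)
    print(f"{name}: {retention:.1f}% quality retained, {latency_cut:.1f}% lower latency")
```

Head pruning barely moves latency (about 4% at 30% and 13% at 70%), which is why GLU pruning is the speed winner despite its larger quality drop.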

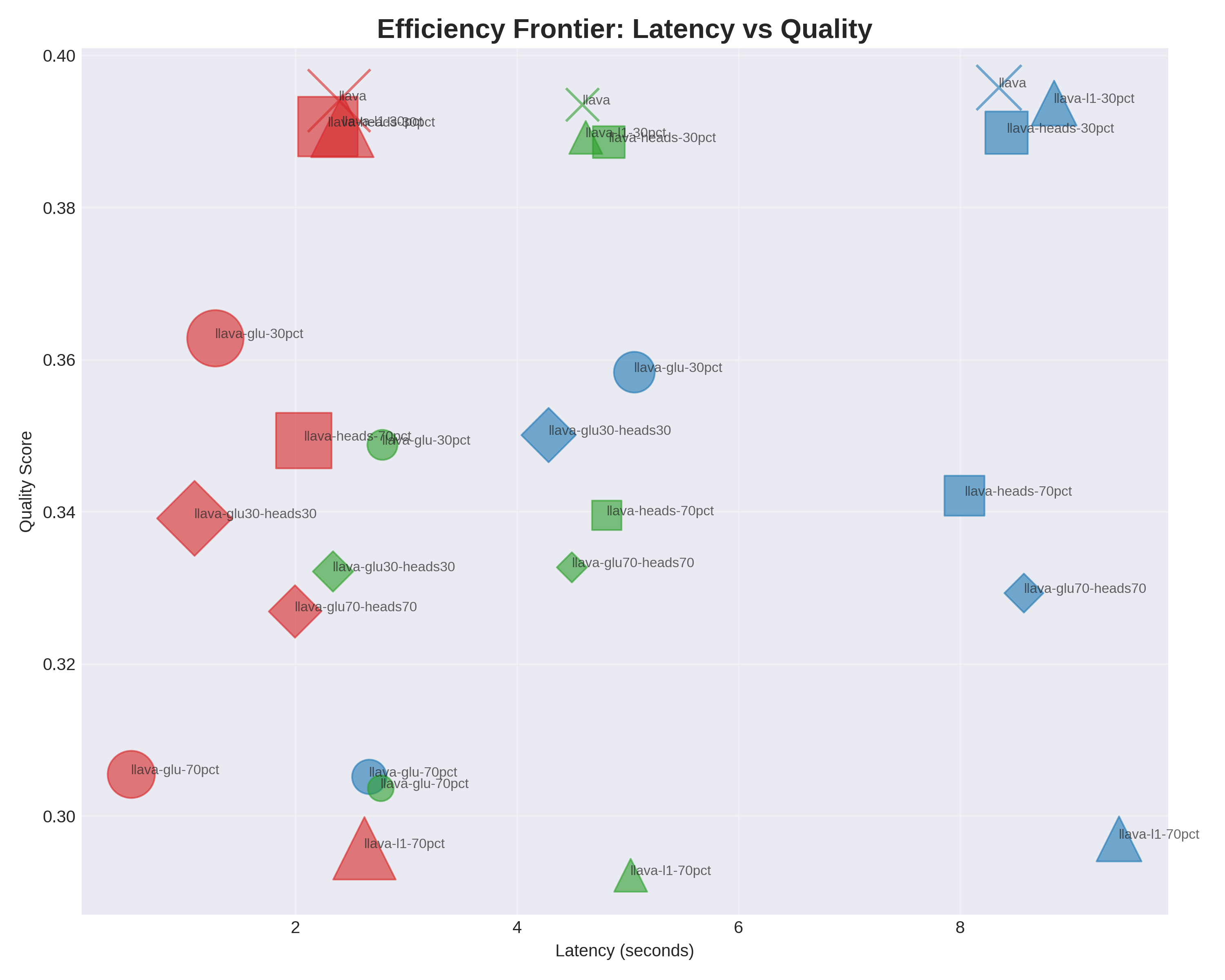

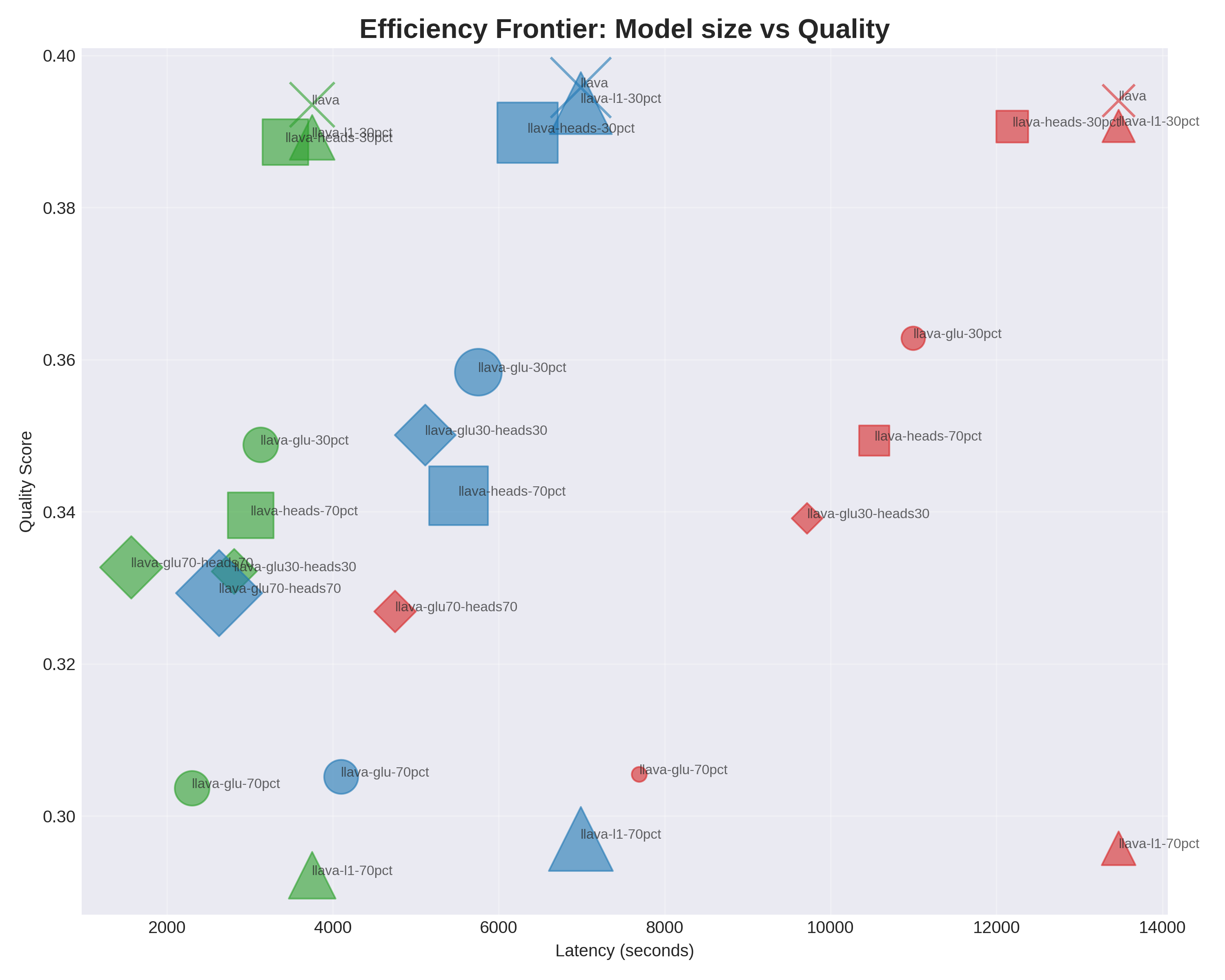

The Efficiency Frontier

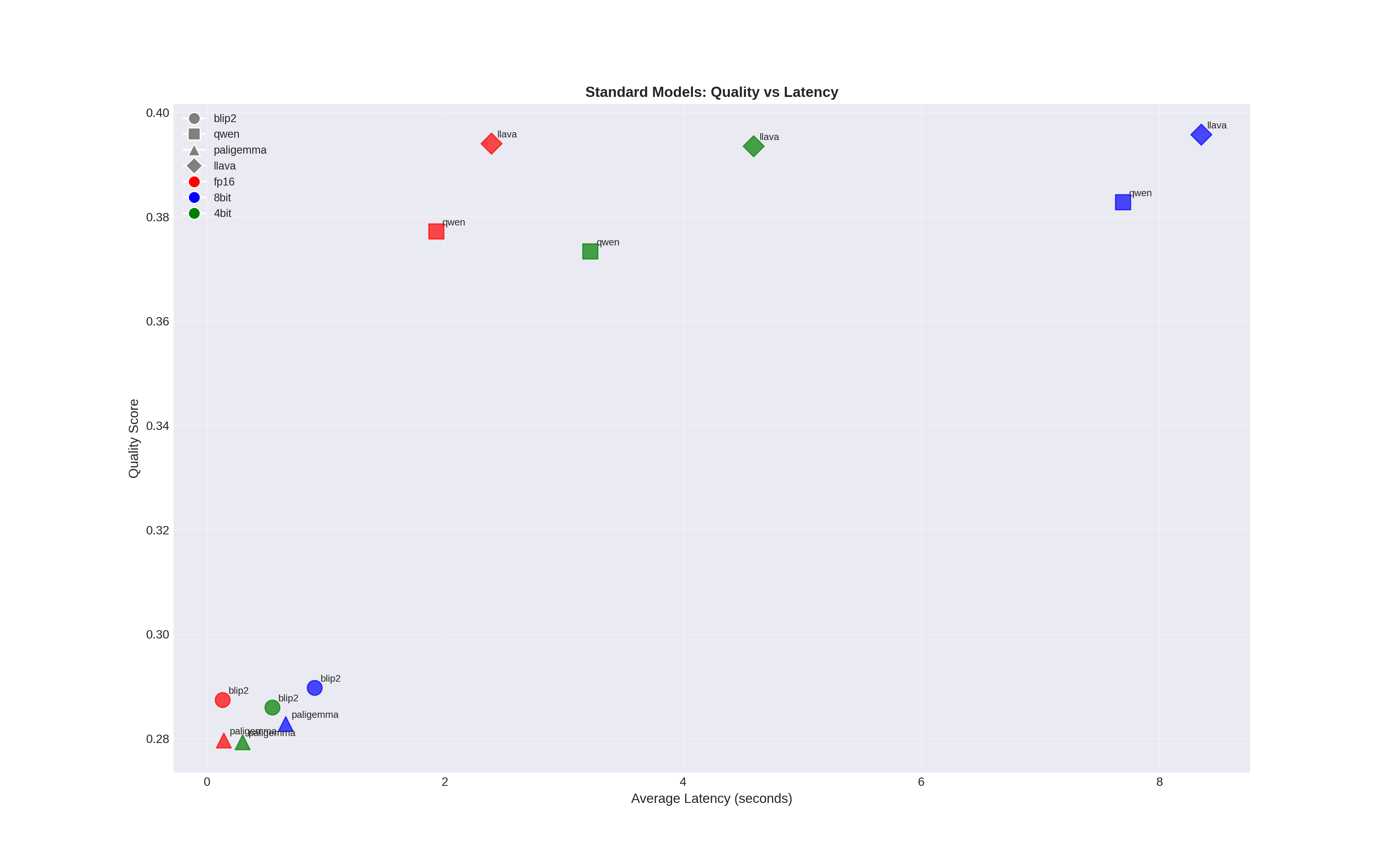

When I plot Quality vs. Latency, a clear “Pareto Frontier” emerges:

- Best for Quality Preservation: LLaVA (8-bit) or Qwen-VL achieve the highest quality scores while offering reasonable memory footprints.

- Best for Speed: PaliGemma’s architecture delivers 0.14s latency at FP16 and 0.30s at 4-bit out-of-the-box, while heavily pruned LLaVA variants (70% GLU) can reach 0.51s.

- The Sweet Spot: LLaVA with 30% GLU pruning strikes an excellent balance—maintaining 92% of baseline quality while achieving 47% latency reduction.
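For readers who want to recompute the frontier, here is a minimal sketch over a handful of LLaVA configurations. The latencies and composite scores are derived from the supplementary table; the function name `pareto_frontier` and the choice of configurations are mine for illustration.

```python
def pareto_frontier(points):
    """Return names of configs not dominated on (lower latency, higher quality)."""
    frontier = []
    for name, latency, quality in points:
        dominated = any(
            other_lat <= latency
            and other_q >= quality
            and (other_lat < latency or other_q > quality)
            for _, other_lat, other_q in points
        )
        if not dominated:
            frontier.append(name)
    return frontier


# (name, avg_latency_s, composite Quality Score) for selected LLaVA runs.
CONFIGS = [
    ("llava fp16", 2.390, 0.3941),
    ("llava 8bit", 8.351, 0.3958),
    ("llava 4bit", 4.590, 0.3936),
    ("glu-30 fp16", 1.274, 0.3628),
    ("glu-70 fp16", 0.512, 0.3055),
    ("heads-30 fp16", 2.292, 0.3906),
    ("heads-70 fp16", 2.075, 0.3493),
]

frontier = pareto_frontier(CONFIGS)
print(frontier)
```

On these two axes, 70% head pruning is dominated by 30% GLU pruning, and unpruned 4-bit is dominated by FP16. Memory is not part of this comparison, so a dominated configuration can still be the right choice when VRAM is the binding constraint.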

Conclusion

After extensive benchmarking across quantization levels and pruning strategies, here are the optimal configurations for different use cases:

Recommended Configurations

For balanced performance (most use cases):

- LLaVA with 30% GLU pruning + fp16: Quality score 0.363 (~8% drop from baseline), 11GB VRAM, 1.27s latency

- This delivers significant speed improvements (47% faster) and moderate size reduction (18% smaller) while maintaining good quality

For deployment under strict memory constraints:

- LLaVA with combined 30% GLU+Heads pruning + 4-bit: Quality score 0.332, 2.8GB VRAM, 2.34s latency

- Achieves 79% size reduction from baseline with acceptable quality and comparable latency

For maximum speed (real-time applications):

- LLaVA with 70% GLU pruning + fp16: Quality score 0.305, 7.7GB VRAM, 0.51s latency

- Fastest inference (78% faster than baseline), though with noticeable quality degradation

When quality cannot be compromised:

- Standard LLaVA with 8-bit quantization: Quality score 0.396 (highest), 7GB VRAM, 8.35s latency

- Matches FP16 quality while roughly halving memory footprint, at the cost of about 3.5x higher latency than FP16 (8.35s vs. 2.39s)

For naturally efficient architectures:

- PaliGemma (4-bit): BERTScore-F1 0.793 (composite Quality Score 0.279), 2.1GB VRAM, 0.30s latency

- Excellent out-of-the-box efficiency without requiring pruning

Key Takeaways

4-bit quantization is essential for memory-constrained deployments, offering roughly 2.6x to 3.6x size reduction with minimal quality loss across all models tested.

GLU pruning outperforms head pruning for speed gains but sacrifices more quality. Choose based on your speed-vs-quality tolerance.

Combined pruning strategies (GLU + heads) can achieve extreme compression (>75%) when paired with quantization, but require careful validation for your specific use case.

Quantization and pruning compound effectively: combining 30% GLU pruning with 4-bit quantization gives 3.1GB and 2.78s latency, compared with 3.7GB and 4.59s for unpruned 4-bit LLaVA.

The benchmark code and analysis tools are open-source. I encourage you to run these tests on your own hardware and datasets to find the optimal configuration for your specific requirements. code: —

Supplementary materials - All experimental data

| model | quantization | load_time_s | model_size_mb | model_parameters | avg_latency_s | mean_answer_length | meteor | sacrebleu | rouge1 | rouge2 | rougeL | bertscore_precision | bertscore_recall | bertscore_f1 | perplexity |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| blip2 | fp16 | 7.602144718170166 | 7142.56640625 | 3744761856 | 0.1320900297164917 | 28.04 | 0.018088865409510595 | 1.4987195485025522e-16 | 0.045506439517707595 | 0.0053422581744783995 | 0.038009202381693044 | 0.8314800691604615 | 0.7689636087417603 | 0.7985913348197937 | 1722.0730457305908 |

| blip2 | 8bit | 12.488256931304932 | 4002.8349609375 | 3744761856 | 0.9038129615783691 | 32.94 | 0.020276920248514303 | 1.3905879240566683e-14 | 0.05044874898403187 | 0.006266350834083712 | 0.041361325637939914 | 0.8305673825740815 | 0.7696254563331604 | 0.7984777760505676 | 2200.681635570526 |

| blip2 | 4bit | 11.00309443473816 | 2332.6787109375 | 1117833216 | 0.5494526481628418 | 42.98 | 0.01783062503878422 | 5.855685979020813e-10 | 0.04537132758475405 | 0.004216360249786321 | 0.03680663389698398 | 0.82113618850708 | 0.7708536028862 | 0.7946808731555939 | 1047.3919509601592 |

| qwen | fp16 | 7.020942211151123 | 4213.3056640625 | 2208985600 | 1.9277745962142945 | 335.02 | 0.10993054444932251 | 0.3720241883491844 | 0.19768879336947226 | 0.036563209326158266 | 0.12799605201545022 | 0.8406179189682007 | 0.8090876615047455 | 0.8240412044525146 | 22.272868156433105 |

| qwen | 8bit | 8.26638126373291 | 2331.3056640625 | 2208985600 | 7.6948521614074705 | 343.24 | 0.11203280519057128 | 0.36751603806657385 | 0.21015944923838079 | 0.04002392949041178 | 0.1314479087875262 | 0.8440375483036041 | 0.8098956096172333 | 0.8262364733219146 | 23.40650001525879 |

| qwen | 4bit | 8.08268666267395 | 1390.3056640625 | 728920576 | 3.2218237686157227 | 288.86 | 0.101422208616655 | 0.22132129075693058 | 0.19153550743668704 | 0.038590052663084254 | 0.1223302942254395 | 0.8477976679801941 | 0.8079729056358338 | 0.8270943069458008 | 26.875355434417724 |

| paligemma | fp16 | 8.359464883804321 | 5576.069793701172 | 2923466480 | 0.14165289402008058 | 25.96 | 0.016579821058854867 | 1.0319519954176028e-18 | 0.03052889054094459 | 0.005841794891328831 | 0.022234087375283 | 0.8230057418346405 | 0.7633704209327697 | 0.7917155456542969 | 8039.198591613769 |

| paligemma | 8bit | 10.452388525009155 | 3291.792449951172 | 2923466480 | 0.6605536127090454 | 30.04 | 0.018663122973674316 | 2.4459824434252426e-16 | 0.035941548548866595 | 0.006703198335028694 | 0.025367866974745586 | 0.825508371591568 | 0.7650368535518646 | 0.7937322628498077 | 8757.097755203247 |

| paligemma | 4bit | 10.597950220108032 | 2149.653778076172 | 1127037680 | 0.30011572360992433 | 26.1 | 0.014684542830283021 | 8.14796562151317e-17 | 0.030211584959605645 | 0.007020676881237382 | 0.024555838901561426 | 0.824471858739853 | 0.7645712399482727 | 0.7929692757129669 | 9555.740810966492 |

| llava | fp16 | 9.678961515426636 | 13472.41796875 | 7063427072 | 2.3904533433914184 | 466.44 | 0.13410496279330691 | 0.793739829945288 | 0.22467789860216908 | 0.03742911675534406 | 0.13843078418099597 | 0.8376226270198822 | 0.810248212814331 | 0.8234326660633087 | 18.600049171447754 |

| llava | 8bit | 14.605058193206787 | 6988.41796875 | 7063427072 | 8.35051585674286 | 469.98 | 0.13523568693174248 | 0.8368236105617174 | 0.22786434296862468 | 0.039947193155572505 | 0.13889487329010033 | 0.8377220523357392 | 0.8116501641273498 | 0.8242302918434143 | 18.02626268386841 |

| llava | 4bit | 15.129019260406494 | 3746.41796875 | 1964201984 | 4.590229787826538 | 475.38 | 0.13398166024478267 | 0.822326797676632 | 0.2228098512523387 | 0.03692489599837828 | 0.1342474557596808 | 0.8385308110713958 | 0.8102889490127564 | 0.8238721835613251 | 17.987461776733397 |

| llava:llava-glu-30pct | fp16 | 109.55466318130493 | 10995.91796875 | 5765027840 | 1.274133381843567 | 289.0 | 0.09247034093265613 | 0.2074276645110611 | 0.17986305699146832 | 0.029673642269995398 | 0.11567635913746692 | 0.8310044538974762 | 0.8028841471672058 | 0.8162094938755036 | 58.54508932113647 |

| llava:llava-glu-30pct | 8bit | 11.688996315002441 | 5750.16796875 | 5765027840 | 5.058102688789368 | 269.8 | 0.08982753144038524 | 0.1555370210949341 | 0.1700666707631461 | 0.027830291795728337 | 0.11105831656854244 | 0.8304580187797547 | 0.8010348117351532 | 0.8151699328422546 | 79.98607303619384 |

| llava:llava-glu-30pct | 4bit | 11.580634117126465 | 3127.29296875 | 1639602176 | 2.780613160133362 | 266.02 | 0.08214684873413101 | 0.16092825963515747 | 0.15679374377242536 | 0.02537598146469953 | 0.10477357287331537 | 0.8183830499649047 | 0.7984587132930756 | 0.8075974905490875 | 62.494220795631406 |

| llava:llava-glu-70pct | fp16 | 70.04848670959473 | 7693.66796875 | 4033697792 | 0.5121692276000976 | 83.36 | 0.03359237442570786 | 3.969499966290017e-05 | 0.07884961411655475 | 0.013955976879917821 | 0.0610252005437985 | 0.8194228208065033 | 0.7900658679008484 | 0.8040149366855621 | 292.2443309402466 |

| llava:llava-glu-70pct | 8bit | 8.676433563232422 | 4099.04296875 | 4033697792 | 2.664307951927185 | 88.76 | 0.033901459334408496 | 1.975621959141903e-05 | 0.07980607857696695 | 0.014500651513840461 | 0.061562641432905095 | 0.8144959080219268 | 0.7905910658836365 | 0.8017778956890106 | 262.0123626327515 |

| llava:llava-glu-70pct | 4bit | 8.790015697479248 | 2301.73046875 | 1206769664 | 2.7659021520614626 | 89.46 | 0.032194032609498546 | 4.220230169002655e-05 | 0.07677673263881524 | 0.013625695140340042 | 0.05917475146625345 | 0.8153072285652161 | 0.7900698232650757 | 0.8019982469081879 | 307.9489730358124 |

| llava:llava-heads-30pct | fp16 | 126.7643768787384 | 12192.41796875 | 6392338432 | 2.291966366767883 | 462.78 | 0.13175933333641668 | 1.0310085514460912 | 0.22323539048704183 | 0.035491550117836015 | 0.1403586749782959 | 0.8289861094951629 | 0.8056354904174805 | 0.8168905663490296 | 18.637470779418944 |

| llava:llava-heads-30pct | 8bit | 12.418628692626953 | 6348.41796875 | 6392338432 | 8.422888956069947 | 451.74 | 0.1300136082221481 | 0.8576093635539881 | 0.22315393097739455 | 0.03577909482882442 | 0.1394693459770584 | 0.828596693277359 | 0.8050431561470032 | 0.8163898229598999 | 19.359551038742065 |

| llava:llava-heads-30pct | 4bit | 12.664499044418335 | 3426.41796875 | 1796429824 | 4.827850112915039 | 475.02 | 0.13030520349421354 | 0.8359141946868133 | 0.2208319893435613 | 0.0346697973647976 | 0.13966784707191998 | 0.8260036158561707 | 0.8043227851390838 | 0.8147271275520325 | 17.350276098251342 |

| llava:llava-heads-70pct | fp16 | 115.60983777046204 | 10528.41796875 | 5519923200 | 2.0753096675872804 | 376.0 | 0.09322253064393392 | 0.4206928537815414 | 0.17039584970770438 | 0.020298313673647102 | 0.11544773106785988 | 0.775820894241333 | 0.7941380822658539 | 0.7843946862220764 | 56.735428280830384 |

| llava:llava-heads-70pct | 8bit | 11.319885015487671 | 5516.41796875 | 5519923200 | 8.042877497673034 | 365.3 | 0.08197797886143807 | 0.3232869899012905 | 0.1606582441993548 | 0.021188297320593533 | 0.1091592039020361 | 0.7747329986095428 | 0.7938290703296661 | 0.7837847447395325 | 82.98422552585602 |

| llava:llava-heads-70pct | 4bit | 11.080994844436646 | 3010.41796875 | 1578326016 | 4.809139022827148 | 394.02 | 0.08559351063351033 | 0.3713369839571963 | 0.15437070647245574 | 0.0211236448402652 | 0.112378597108198 | 0.7662426018714905 | 0.7924101495742798 | 0.7787193262577057 | 85.45087451457977 |

| llava:llava-l1-30pct | fp16 | 161.1645531654358 | 13472.41796875 | 7063427072 | 2.420387420654297 | 496.16 | 0.132113159489529 | 0.717786815365339 | 0.2174576391877565 | 0.03485109234288537 | 0.1292096309762401 | 0.8364692723751068 | 0.8099189078807831 | 0.8227060163021087 | 19.572147998809815 |

| llava:llava-l1-30pct | 8bit | 13.554652452468872 | 6988.41796875 | 7063427072 | 8.847558307647706 | 500.18 | 0.1341743548003289 | 0.6944064815447887 | 0.2234660838008884 | 0.03275717225019906 | 0.12804364778392727 | 0.8372837388515473 | 0.810813101530075 | 0.8235775220394135 | 19.485825881958007 |

| llava:llava-l1-30pct | 4bit | 13.430489540100098 | 3746.41796875 | 1964201984 | 4.617258911132812 | 494.66 | 0.13005018879568944 | 0.6814558582687607 | 0.21560113607717313 | 0.03201750378629854 | 0.12788553233740813 | 0.8362867677211762 | 0.8088620805740356 | 0.8220530188083649 | 19.714609394073488 |

| llava:llava-l1-70pct | fp16 | 128.66919422149658 | 13472.41796875 | 7063427072 | 2.617942032814026 | 177.76 | 0.055637933761346764 | 0.2805705910602505 | 0.06584162939080723 | 0.012022585367567213 | 0.05152921043053048 | 0.7422366940975189 | 0.7925657725334168 | 0.7658526813983917 | 7.135210094451904 |

| llava:llava-l1-70pct | 8bit | 52.62113380432129 | 6988.41796875 | 7063427072 | 9.434726128578186 | 186.64 | 0.055651720311999414 | 0.28151951839707984 | 0.06768345847496432 | 0.011341040562006157 | 0.05227517343387794 | 0.7450437998771667 | 0.7934505808353424 | 0.7677844369411468 | 8.393464035987854 |

| llava:llava-l1-70pct | 4bit | 13.745697021484375 | 3746.41796875 | 1964201984 | 5.023973126411438 | 182.58 | 0.05041976806845838 | 0.25275233267951147 | 0.06489356494709586 | 0.011231128650576908 | 0.05086470529308464 | 0.7338869082927704 | 0.7920506286621094 | 0.7613548827171326 | 8.817115292549133 |

| llava:llava-glu30-heads30 | fp16 | 100.98091626167297 | 9715.91796875 | 5093939200 | 1.0862100791931153 | 219.48 | 0.07599619975896582 | 0.09070476496800528 | 0.13313607941776823 | 0.021859716366717075 | 0.09542863425169751 | 0.8208302640914917 | 0.7977265894412995 | 0.8084229874610901 | 83.0324315071106 |

| llava:llava-glu30-heads30 | 8bit | 10.770886182785034 | 5110.16796875 | 5093939200 | 4.284811911582946 | 225.74 | 0.08452705213889246 | 0.09851201615686041 | 0.15191933030926744 | 0.025718583864508683 | 0.10821157030985261 | 0.8293434298038482 | 0.7996419775485992 | 0.8139288914203644 | 90.46385460853577 |

| llava:llava-glu30-heads30 | 4bit | 10.823771715164185 | 2807.29296875 | 1471830016 | 2.334595727920532 | 189.14 | 0.0679720249389837 | 0.03992202738129364 | 0.11926591899778542 | 0.020031812050819185 | 0.08612631047355333 | 0.8249871873855591 | 0.7951509499549866 | 0.8093708050251007 | 118.5005710220337 |

| llava:llava-glu70-heads70 | fp16 | 52.23102784156799 | 4749.66796875 | 2490193920 | 1.9921028709411621 | 263.2 | 0.07404397876949137 | 0.2371579010214021 | 0.12686481320184506 | 0.01514453839468994 | 0.09118277426464053 | 0.7677023458480835 | 0.7936930477619171 | 0.7799117231369018 | 113.16414300918579 |

| llava:llava-glu70-heads70 | 8bit | 6.547288656234741 | 2627.04296875 | 2490193920 | 8.577187380790711 | 252.36 | 0.07485031812149327 | 0.19460088360767036 | 0.13119642690107486 | 0.0147698589609386 | 0.09238896173040614 | 0.7720016205310821 | 0.7929561460018157 | 0.7820047545433044 | 147.6547634458542 |

| llava:llava-glu70-heads70 | 4bit | 6.716195583343506 | 1565.73046875 | 820893696 | 4.494761400222778 | 291.88 | 0.07649965686575491 | 0.2712843417474439 | 0.14004057586836682 | 0.01618213063759447 | 0.1061228240780295 | 0.7710466825962067 | 0.7934619140625 | 0.7815650236606598 | 171.27051867485045 |